High-Quality Software Engineering

Copyright © 2005-2007 David Drysdale

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License".

Table of Contents

- 1 Introduction

- 2 Requirements

- 3 Design

- 4 Code

- 5 Code Review

- 6 Test

- 7 Support

- 8 Planning a Project

- 9 Running a Project

- Index

1 Introduction

Software is notorious for its poor quality. Buggy code, inconvenient interfaces and missing features are almost expected by the users of most modern software.

Software development is also notorious for its unreliability. The industry abounds with tales of missed deadlines, death march projects and huge cost overruns.

This book is about how to avoid these failures. It’s about the whole process of software engineering, not just the details of designing and writing code: how to build a team, how to plan a project, how to run a support organization.

There are plenty of other books out there on these subjects, and indeed many of the ideas in this one are similar to those propounded elsewhere.

However, this book is written from the background of a development sector where software quality really matters. Networking software and device level software often need to run on machines that are unattended for months or years at a time. The prototypical examples of these kinds of devices are the big phone or network switches that sit quietly in a back room somewhere and just work. These devices often have very high reliability requirements: the “six-nines” of the subtitle.

“Six-nines” is the common way of referring to a system that must have 99.9999% availability. Pausing to do some sums, this means that the system can only be out of action for a total of 32 seconds in a year. Five-nines (99.999% availability) is another common reliability level; a five-nines system can only be down for around 5 minutes in a year.

When you stop to think about it, that’s an incredibly high level of availability. The average light bulb probably doesn’t reach five-nines reliability (depending on how long it takes you to get out a stepladder), and that’s just a simple piece of wire. Most people are lucky if their car reaches two-nines reliability (just over three and a half days off the road per year). Telephone switching software is pretty complex stuff, and yet it manages to hit these quality levels (when did your regular old telephone last fail to work?).

| Reliability Level | Uptime Percentage | Downtime per year |
|---|---|---|
| Two-nines | 99% | 3.65 days |
| Three-nines | 99.9% | 9 hours |
| Four-nines | 99.99% | 53 minutes |
| Five-nines | 99.999% | 5 minutes |
| Six-nines | 99.9999% | 32 seconds |

Table 1.1: Allowed downtimes for different reliability levels
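The figures in Table 1.1 follow from simple arithmetic: the permitted downtime is the unavailable fraction multiplied by the length of a year. A minimal sketch of that sum in Python, assuming a 365-day year (the function name `allowed_downtime_seconds` is an illustrative choice, not something from the original text):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: a non-leap, 365-day year


def allowed_downtime_seconds(nines: int) -> float:
    """Return the yearly downtime budget, in seconds, for an N-nines system."""
    unavailable_fraction = 10.0 ** -nines  # e.g. six-nines -> 0.000001
    return SECONDS_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for nines in range(2, 7):
        seconds = allowed_downtime_seconds(nines)
        print(f"{nines}-nines: {seconds:12.1f} s"
              f" = {seconds / 60:10.2f} min"
              f" = {seconds / 86400:6.3f} days")
```

Under these assumptions six-nines comes out at roughly 31.5 seconds a year, and five-nines at a little over 5 minutes.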

Writing software that meets these kinds of reliability requirements is a tough challenge, and one that the software industry in general would be very hard pressed to meet.

This book is about meeting this challenge; it’s about the techniques and trade-offs that are worthwhile when hitting these levels of software quality. This goes far beyond just getting the programmers to write better code; it involves planning, it involves testing, it involves teamwork and management—it involves the whole process of software development.

All of the steps involved in building higher quality software have a cost.

Lots of these techniques and recommendations also apply outside of this particular development sector. Many of them are not as onerous or inefficient as you might think (particularly when all of the long term development and support costs are properly factored in), and many of them are the same pieces of advice that show up in lots of software engineering books (except that here we really mean it).

There are some things that are different about this development sector, though. The main thing is that software quality is taken seriously by everyone involved:

- The customers are willing to pay more for it, and are willing to put in the effort to ensure that their requirements and specification are clear, coherent and complete.

- The sales force emphasize it and can use it to win sales even against substantially lower-priced competitors.

- Management are willing to pay for the added development costs and slower time-to-market.

- Management are willing to invest in long-term development of their staff so that they become skilled enough to achieve true software quality.

- The programmers can take the time to do things right.

- The support phase is taken seriously, rather than being left as a chore for less-skilled staff to take on.

Taken together, this means that it’s worthwhile to invest in the architecture and infrastructure for quality software development, and to put the advice from software engineering books into practice—all the time, every time.

It’s also possible to do this in a much more predictable and repeatable way than with many areas of software development—largely because of the emphasis on accurate specifications (see Waterfall versus Agile).

Of course, there are some aspects of software development that this book doesn’t cover. The obvious example is user interfaces—the kind of software that runs for a year without crashing is also the kind of software that rarely has to deal with unpredictable humans (and unreliable UI libraries). However, there are plenty of other places to pick up tips on these topics that I skip [1].

1.1 Intended Audience

1.1.1 New Software Engineers

One of the aims of this book is to cover the things I wish that I’d known when I first started work as a professional software engineer working on networking software. It’s the distillation of a lot of good advice that I received along the way, together with lessons learnt through bitter experience. It’s the set of things that I’ve found myself explaining over the years to both junior software developers and to developers who were new to carrier-class networking software—so a large fraction of the intended audience is exactly those software developers.

There’s a distinction here between the low-level details of programming, and software engineering: the whole process of building and shipping software. The details of programming—algorithms, data structures, debugging hints, modelling systems, language gotchas—are often very specific to the particular project and programming environment (hence the huge range of the O’Reilly library). Bright young programmers fresh from college often have significant programming skills, and easily pick up many more specifics of the systems that they work on. Similarly, developers moving into this development sector obviously bring significant skill sets with them.

Planning, estimating, architecting, designing, tracking, testing, delivering and supporting software are as important as stellar coding skills.

This book aims to help with this understanding, in a way that’s mostly agnostic about the particular software technologies and development methodologies in use. Many of the same ideas show up in discussions of functional programming, object-oriented programming, agile development, extreme programming etc., and the important thing is to understand:

- why they’re good ideas

- when they’re appropriate and when they’re not appropriate (which involves understanding why they’re good ideas)

- how to put them into practice.

It’s not completely agnostic, though, because our general emphasis on high-quality software engineering means that some things are unlikely to be appropriate. An overall system is only as reliable as its least reliable part, and so there’s no point in trying to write six-nines Visual Basic for Excel—the teetering pyramid of Excel, Windows and even PC hardware is unlikely to support the concept.

1.1.2 Software Team Leaders

Of course, if someone had given me all of this advice about quality software development, all at once, when I started as a software developer, I wouldn’t have listened to it.

Instead, all of this advice trickled in over my first few years as a software engineer, reiterated by a succession of team leaders and reinforced by experience. In time I moved into the position of being the team leader myself—and it was now my turn to trickle these pieces of advice into the teams I worked with.

A recurring theme (see Developing the Developers) of this book is that the quality of the software depends heavily on the calibre of the software team, and so improving this calibre is an important part of the job of a team leader.

The software team leader has a lot of things to worry about as part of the software development process. The team members can mostly concentrate on the code itself (designing it, writing it, testing it, fixing it), but the team leader needs to deal with many other things too: adjusting the plan and the schedule so the release date gets hit, mollifying irate or unreasonable customers, persuading the internal systems folk to install a better spam filter, gently soothing the ego of a prima-donna programmer and so on.

Thus, the second intended audience for this book is software team leaders, particularly those who are either new to team leading or new to higher quality software projects. Hopefully, anyone in this situation will find that a lot of the lower-level advice in this book is just a codification of what they already know—but a codification that is a useful reference when working with their team (particularly the members of their team who are fresh from college). There are also a number of sections that are specific to the whole business of running a software project, which should be useful to these new team leaders.

At this point it’s worth stopping to clarify exactly what I mean by a software team leader—different companies and environments use different job names, and divide the responsibilities for roles differently. Here, a team leader is someone who has many of the following responsibilities (but not necessarily all of them).

- Designer of the overall software system, dividing the problem into the relevant subcomponents.

- Assigner of tasks to programmers.

- Generator and tracker of technical issues and worries that might affect the success of the project.

- Main answerer of technical questions (the team “guru”), including knowing who best to redirect the question to when there’s no immediate answer.

- Tracker of project status, including reporting on that status to higher levels of management.

- Trainer or mentor of less-experienced developers, including assessment of their skills, capabilities and progress.

1.2 Common Themes

A number of common themes recur throughout this book. These themes are aspects of software development that are very important for producing the kind of high-quality software that hits six-nines reliability. They’re very useful ideas for other kinds of software too, but are rarely emphasized in the software engineering literature.

1.2.1 Maintainability

Maintainability is all about making software that is easy to modify later, and is an aspect of software development that is rarely considered. This is absolutely vital for top quality software, and is valuable elsewhere too—there are very few pieces of software that don’t get modified after version 1.0 ships, and so planning for this later modification makes sense over the long term.

This book tries to bring out as many aspects of maintainability as possible. Tracking the numbers involved—development time spent improving maintainability, number of bugs reported, time taken to fix bugs, number of follow-on bugs induced by rushed bug fixes—can quickly show the tangible benefits of concentrating on this area.

Maintainability is good design.

Maintainability is communication.

All of this information needs to be communicated from the people who understand it in the first place—the original designers and coders—to the people who have to understand it later—the programmers who are developing, supporting, fixing and extending the code.

Some of this communication appears as documentation, in the form of specifications, design documents, scalability analyses, stored email discussions on design decisions etc. A lot of this communication forms a part of the source code, in the form of comments, identifier names and even directory and file structures.

1.2.2 Knowing Reasons Why

Building a large, complex software system involves a lot of people making a lot of decisions along the way. For each of those decisions, there can be a range of possibilities together with a range of factors for and against each possibility. To make good decisions, it’s important to know as much as possible about these factors.

The long-term quality of the codebase often forces painful or awkward decisions in the short and medium term.

Scott Meyers has written a series of books about C++, which are very highly regarded and which deservedly sell like hot cakes. A key factor in the success of these books is that he builds a collection of rules of thumb for C++ coding, but he makes sure that the reader understands the reasons for the rule. That way, they will be able to make an informed decision if they’re in the rare scenario where other considerations overrule the reasons for a particular rule.

In such a scenario, the software developer can then come up with other ways to avoid the problems that led to the original rule. To take a concrete example, global variables are often discouraged because they:

- pollute the global namespace

- have no access control (and so can be modified by any code)

- implicitly couple together widely separated areas of the code

- aren’t inherently thread safe.

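Replacing a global variable with a straightforward Singleton design pattern [2] counteracts the first two problems (namespace pollution and lack of access control). Knowing the full list allows the fourth (thread safety) to be dealt with separately by adding locking, and leaves the third (implicit coupling) as a remaining issue to watch out for.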
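As an illustration of this trade-off, here is a minimal Python sketch (Python is used only for brevity; the `Settings` class and its methods are hypothetical names, not taken from any real library). The single accessor keeps the data out of the global namespace and routes every change through one place, the locks deal with thread safety, and the implicit coupling between distant callers is still there.

```python
import threading


class Settings:
    """Hypothetical singleton standing in for a former global dictionary."""

    _instance = None
    _instance_lock = threading.Lock()

    def __init__(self):
        self._values = {}
        self._lock = threading.Lock()

    @classmethod
    def instance(cls):
        # Serialize creation so that two threads cannot build two instances.
        with cls._instance_lock:
            if cls._instance is None:
                cls._instance = cls()
            return cls._instance

    def get(self, key, default=None):
        # All reads go through the lock, so callers never see a partial update.
        with self._lock:
            return self._values.get(key, default)

    def set(self, key, value):
        # All writes go through this one method, which is where validation
        # or logging could be added later.
        with self._lock:
            self._values[key] = value
```

Any code that calls `Settings.instance()` is still coupled to every other caller, so this sketch addresses three of the four concerns listed above rather than all of them.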

1.2.3 Developing the Developers

The best developers can be better than the average developer by an order of magnitude, and infinitely better than the worst developer.

This makes it obvious that the single most important factor in producing good software is the calibre of the development team producing it.

A lot of this is innate talent, and this means that recruitment and retention is incredibly important. The other part is to ensure that the developers you’ve already got are fulfilling all of their potential.

Programmers are normally pretty bright people, and bright people like to learn. Giving them the opportunity to learn is good for everyone: the programmers are happier (and so more likely to stick around), the software becomes higher quality, and the programmers are more effective on future projects because they’re more skilled.

It’s important to inculcate good habits into new programmers; they should be encouraged to develop into being the highest quality engineers they can be, building the highest quality software they can. This book aims to help this process by distilling some of the key ideas involved in developing top quality software.

The skills needed to write great code are not enough on their own; the most effective software engineers also develop skills outside the purely technical arena. Communicating with customers, writing coherent documentation, understanding the constraints of the overall project, accurately estimating and tracking tasks—all of these skills improve the chances that the software will be successful and of top quality.

1.3 Book Structure

The first part of this book covers the different phases of software development, in roughly the order that they normally occur:

- Requirements: What to build.

- Design: How to build it.

- Code: Building it.

- Test: Checking it got built right.

- Support: Coping when the customers discover parts that (they think) weren’t built right.

Depending on the size of the project and the particular software development methodology (e.g. waterfall vs. iterative), this cycle can range in size from hours or days to years, and the order of steps can sometimes vary (e.g. writing tests before the code), but the same principles apply regardless. As it happens, high quality software usually gets developed using the apparently old-fashioned waterfall approach—more on the reasons for this in the Requirements chapter.

Within this set of steps of a development cycle, I’ve also included a chapter specifically on code reviews (see Code Review). Although there are plenty of books and articles that help programmers improve their coding skills, and even though the idea of code reviews is often recommended, there is a dearth of information on why and how to go about a code review.

The second part of the book takes a step back to cover more about the whole process of running a high quality software development project. This covers the mechanics of planning and tracking a project (in particular, how to work towards more accurate estimation) to help ensure that the software development process is as high quality as the software itself.

2 Requirements

What is it that the customer actually wants? What is the software supposed to do? This is what requirements are all about—figuring out what it is you’re supposed to build.

The whole business of requirements is one of the most common reasons for software projects to turn into disastrous failures.

So why should it be so hard? First up, there are plenty of situations where the software team really doesn’t know what the customer wants. It might be some new software idea that’s not been done before, so it’s not clear what a potential customer will focus on. It might be that the customer contact who generates the requirements doesn’t really understand the low-level details of what the software will be used for. It might just be that until the user starts using the code in anger, they don’t realize how awkward some parts of the software are.

The requirements describe what the customer wants to achieve, and the specification details how the software is supposed to help them achieve it.

NWAS: Not Working, As Specified.

For fresh-faced new developers, this can all come as a bit of a shock. Programming assignments on computer science courses all have extremely well-defined requirements; open-source projects (which are the other kind of software that they’re likely to have been exposed to) normally have programmers who are also customers for the software, and so the requirements are implicitly clear.

This chapter is all about this requirements problem, starting with a discussion of why it is (or should be) less of an issue for true six-nines software systems.

2.1 Waterfall versus Agile

Get your head out of your inbred development sector and look around [4].

Here’s a little-known secret: most six-nines reliability software projects are developed using a waterfall methodology. For example, the telephone system and the Internet are both fundamentally grounded on software developed using a waterfall methodology. This comes as a bit of a surprise to many software engineers, particularly those who are convinced of the effectiveness of more modern software development methods (such as agile programming).

Before we explore why this is the case, let’s step back for a moment and consider exactly what’s meant by “waterfall” and “agile” methodologies.

The waterfall methodology for developing software involves a well-defined sequence of steps, all of which are planned in advance and executed in order. Gather requirements, specify the behaviour, design the software, generate the code, test it and then ship it (and then support it after it’s been shipped).

An agile methodology tries to emphasize an adaptive rather than a predictive approach, with shorter (weeks not months or years), iterated development cycles that can respond to feedback on the previous iterations. This helps with the core problem of requirements discussed at the beginning of this chapter: when the customer sees an early iteration of the code, they can physically point to the things that aren’t right, and the next iteration of the project can correct them.

The Extreme Programming (XP) variant of this methodology involves a more continuous approach to this problem of miscommunication of requirements: having the customer on-site with the development team (let’s call this the customer avatar). In other words: if interaction between the developers and the customer is a Good Thing, let’s take it to its Extreme.

So why do most high-quality software projects stick to the comparatively old-fashioned and somewhat derided waterfall approach?

The key factor that triggers this is that six-nines projects don’t normally fall into the requirements trap. These kinds of projects typically involve chunks of code that run in a back room—behind a curtain, as it were. The external interfaces to the code are binary interfaces to other pieces of code, not graphical interfaces to fallible human beings. The customers requesting the project are likely to include other software engineers. But most of all, the requirements document is likely to be a reference to a fixed, well-defined specification.

For example, the specification for a network router is likely to be a collection of IETF RFC [5] documents that describe the protocols that the router should implement; between them, these RFCs will specify the vast majority of the behaviour of the code. Similarly, a telephone switch implements a large collection of standard specifications for telephony protocols, and the fibre-optic world has its own collection of large standards documents. A customer requirement for, say, OSPF routing functionality translates into a specification that consists of RFC2328, RFC1850 and RFC2370.

So, a well-defined, stable specification allows the rest of the waterfall approach to proceed smoothly, as long as the team implementing the software is capable of properly planning the project (see Planning a Project), designing the system (see Design), generating the code (see Code) and testing it properly (see Test).

2.2 Use Cases

Most people find it easier to deal with concrete scenarios than with the kinds of abstract descriptions that make their way into requirements documents. This means that it’s important to build some use cases to clarify and confirm what’s actually needed.

A use case is a description of what the software and its user do in a particular scenario. The most important use cases are the ones that correspond to the operations that will be most common in the finished software—for example, adding, accessing, modifying and deleting entries in a data-driven application (sometimes known as CRUD: create, read, update, delete), or setting up and tearing down connections in a networking stack.

It’s also important to include use cases that describe the behaviour in important error paths. What happens to the credit card transaction if the user’s Internet connection dies halfway through? What happens if the disk is full when the user wants to save the document they’ve worked on for the last four hours? Asking the customer these kinds of “What If?” questions can reveal a lot about their implicit assumptions for the behaviour of the code.

Use case scenarios describe what the customer expects to happen; testing confirms that this is indeed what does happen.
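One way to make an error-path use case concrete is to write it down as an executable check. The sketch below is purely illustrative: `DocumentStore`, `DiskFullError` and the expected behaviour (a failed save leaves the previous version intact) are assumptions standing in for whatever the customer actually says when asked the “What If?” question.

```python
class DiskFullError(Exception):
    """Raised by the hypothetical store when there is no room left."""


class DocumentStore:
    """Toy in-memory stand-in for a disk with a fixed capacity in characters."""

    def __init__(self, capacity):
        self._capacity = capacity
        self._documents = {}

    def save(self, name, text):
        # Space used by every document except the one being overwritten.
        used = sum(len(t) for n, t in self._documents.items() if n != name)
        if used + len(text) > self._capacity:
            raise DiskFullError(name)  # the previous version is left untouched
        self._documents[name] = text

    def load(self, name):
        return self._documents[name]


def check_save_when_disk_full():
    """Use case: the disk fills up while saving a new version of a document."""
    store = DocumentStore(capacity=10)
    store.save("report", "draft")
    try:
        store.save("report", "a much longer final version")
    except DiskFullError:
        pass  # expected: the user is told that the save failed
    else:
        raise AssertionError("save should have failed")
    # Expected by the customer (assumed): the earlier version survives.
    assert store.load("report") == "draft"


if __name__ == "__main__":
    check_save_when_disk_full()
```

Writing the scenario this way forces the implicit assumption (what happens to the old version?) into the open, where the customer can confirm or correct it.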

2.3 Implicit Requirements

The use cases described in the previous section are important, but for larger systems there are normally also a number of requirements that are harder to distil down into individual scenarios.

These requirements relate to the average behaviour of the system over a (large) number of different iterations of various scenarios:

- Speed: How fast does the software react? How does this change as more data is included?

- Size: How much disk space and memory is needed for the software and its data?

- Resilience: How well does the system cope with network delays, hardware problems, operating system errors?

- Reliability: How many bugs are expected to show up in the system as it gets used? How well should the system cope with bugs in itself?

- Support: How fast do the bugs need to get fixed? What downtime is required when fixes get rolled out?

For six-nines software, these kinds of factors are often explicitly included in the requirements—after all, the phrase “six-nines” is itself a resilience and reliability requirement. Even so, sometimes the customer has some implicit assumptions about them that don’t make it as far as the spec (particularly for the later items on the list above). The implicit assumptions are driven by the type of software being built—an e-commerce web server farm will just be assumed to be more resilient than a web page applet.

Even when these factors are included in the requirements (typically as a Service Level Agreement or SLA), it can be difficult to put accurate or realistic numbers against each factor. This may itself induce another implicit requirement for the system—to build in a way of generating the quantitative data that is needed for tracking. An example might be to include code to measure and track response times, or to include code to make debugging problems easier and swifter (see Diagnostics). In general, SLA factors are much easier to deal with in situations where there is existing software and tracking systems to compare the new software with.
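As a rough sketch of what such built-in measurement might look like, the following Python helper records how long operations take and reports a percentile that could be compared against an SLA figure. The class name `ResponseTimeTracker` and the nearest-rank percentile method are illustrative assumptions, not something prescribed by the text.

```python
import math
import time
from contextlib import contextmanager


class ResponseTimeTracker:
    """Hypothetical helper that records operation durations in seconds."""

    def __init__(self):
        self._samples = []

    @contextmanager
    def measure(self):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the operation raised an exception.
            self._samples.append(time.perf_counter() - start)

    def record(self, seconds):
        """Add a duration measured elsewhere."""
        self._samples.append(seconds)

    def count(self):
        return len(self._samples)

    def percentile(self, fraction):
        """Nearest-rank percentile; fraction is in the range (0, 1]."""
        if not self._samples:
            raise ValueError("no samples recorded")
        ordered = sorted(self._samples)
        rank = max(1, math.ceil(fraction * len(ordered)))
        return ordered[rank - 1]
```

A request handler would wrap its work in `with tracker.measure():`, and a periodic report could then compare a value such as `tracker.percentile(0.5)` with the agreed target.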

3 Design

The process of making a software product is sometimes compared to the process of making a building. This comparison is sometimes made to illustrate how amateurish and unreliable software engineering is in comparison to civil engineering, with the aim of improving the former by learning lessons from the latter.

However, more alert commentators point out that the business of putting up buildings is only reliable and predictable when the buildings are the same as ones that have been done before. Look a little deeper, into building projects that are the first of their kind, and the industry’s reputation for cost overruns and schedule misses starts to look comparable with that of the software industry.

A new piece of software is almost always doing something new, that hasn’t been done in exactly that way before. After all, if it were exactly the same as an existing piece of software, we could just reuse that software—unlike buildings, it’s easy to copy the contents of a hard drive full of code.

There is only one technique that can tame the difficulty of designing large, complex systems: divide and conquer.

Dividing a large problem into smaller pieces makes each piece tractable: tractable for the humans building the system, that is. This process of subdivision is much more about making the system comprehensible for its designers and builders, than about making the compiler and the microprocessor able to deal with the code. The underlying hardware can cope with any amount of spaghetti code; it’s just the programmers that can’t cope with trying to build a stable, solid system out of spaghetti. Once a system reaches a certain critical mass, there’s no way that the developers can hold all of the system in their heads at once without some of the spaghetti sliding out of their ears [6].

Software design is all about this process of dividing a problem into the appropriate smaller chunks, with well-defined, understandable interfaces between the chunks. Sometimes these chunks will be distinct executable files, running on distinct machines and communicating over a network. Sometimes these chunks will be objects that are distinct instances of different classes, communicating via method calls. In every case, the chunks are small enough that a developer can hold all of a chunk in their head at once—or can hold the interface to the chunk in their head so they don’t need to understand the internals of it.

Outside of the six-nines world of servers running in a back room, it’s often important to remember a chunk which significantly affects the design, but which the design has less ability to affect: the User. The design doesn’t describe the internals of the user (that would be biology, not engineering) but it does need to cover the interface to the user.

This chapter discusses the principles behind good software design—where “good” means a design that has the highest chance of working correctly, being low on bugs, being easy to extend in the future, and implemented in the expected timeframe. It also concentrates on the specific challenges that face the designers of highly resilient, scalable software.

Before moving on to the rest of the chapter, a quick note on terminology. Many specific methodologies for software development have precise meanings for terms like “object” and “component”; in keeping with the methodology-agnostic approach of this book, these terms (and others, like “chunk”) are used imprecisely here. At the level of discussion in this chapter, if the specific difference between an object and a component matters, you’re probably up to no good.

3.1 Interfaces and Implementations

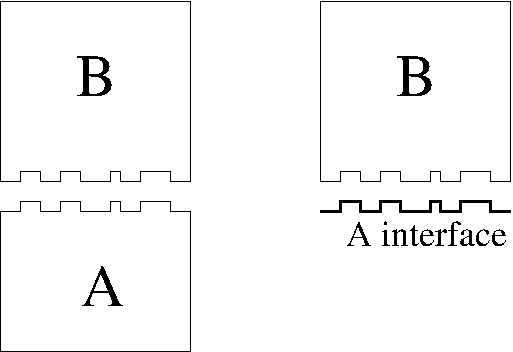

The most important aspect of the division of a problem into individual components is the separation between the interface and the implementation of each component.

The interface to a component is built up of the operations that the component can perform, together with the information that other components (and their programmers) need to know in order to successfully use those operations. For the outermost layer of the software, the interface may well be the User Interface, where the same principle applies—the interface is built up of the operations that the user can perform with the keyboard and mouse—clicking buttons, moving sliders and typing command-line options.

As ever, the definition of the “interface” to a component or an object can vary considerably. Sometimes the interface is used to just mean the set of public methods of an object; sometimes it is more comprehensive and includes pre-conditions and post-conditions (as in Design By Contract) or performance guarantees (such as for the C++ STL); sometimes it includes aspects that are only relevant to the programmers, not the compiler (such as naming conventions [7]). Here, we use the term widely to include all of these variants—including more nebulous aspects, such as comments that hint on optimal use of the interface.

The implementation of a component is of course the chunk of software that fulfils the interface. This typically involves both code and data; as Niklaus Wirth has observed, “Algorithms + Data Structures = Programs”.

If interface and implementation aren’t distinct, none of the other parts of the system can just use the component as a building block.
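The separation can be sketched in a few lines of Python. Everything here is hypothetical and chosen only for illustration: `WordStore` is the interface (operations plus the conditions a client may rely on), `DictWordStore` is one implementation (code plus a data structure), and `most_frequent` is client code that uses the component purely as a building block.

```python
from abc import ABC, abstractmethod


class WordStore(ABC):
    """Interface: the operations clients may use, plus what they can assume."""

    @abstractmethod
    def add(self, word):
        """Record one occurrence of word. Pre-condition: word is non-empty."""

    @abstractmethod
    def count(self, word):
        """Return the occurrences recorded so far (0 if never added)."""


class DictWordStore(WordStore):
    """One possible implementation, backed by a dictionary."""

    def __init__(self):
        self._counts = {}

    def add(self, word):
        if not word:
            raise ValueError("word must be non-empty")
        self._counts[word] = self._counts.get(word, 0) + 1

    def count(self, word):
        return self._counts.get(word, 0)


def most_frequent(store, candidates):
    """Client code: written against the WordStore interface only."""
    return max(candidates, key=store.count)
```

Because `most_frequent` never touches `_counts`, the dictionary could later be swapped for a database-backed implementation without the client changing.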

3.1.1 Good Interface Design

So, what makes a good interface for a software component?

The interface to a software component is there to let other chunks of software use the component. As such, a good interface is one that makes this easier, and a bad interface is one that makes this harder. This is particularly important in the exceptional case that the interface is to the user rather than another piece of software.

The interface also provides an abstraction of what the internal implementation of the component does. Again, a good interface makes this internal implementation easier rather than harder; however, this goal is often at odds with the previous goal.

There are several principles that help to make an interface optimal for its clients—where the “client” of an interface includes both the other chunks of code that use the interface, and the programmers that write this code.

- Clear Responsibility. Each chunk of code should have a particular job to do, and this job should boil down to a single clear responsibility (see Component Responsibility).

- Completeness. It should be possible to perform any operation that falls under the responsibility of this component. Even if no other component in the design is actually going to use this particular operation, it’s still worth sketching out the interface for it. This provides reassurance that it will be possible to extend the implementation to that operation in the future, and also helps to confirm that the component’s particular responsibilities are clear.


- Principle of Least Astonishment. The interface to a component needs to be written relative to the expectations of the clients—who should be assumed to know nothing about the internals of the component. This includes expectations about resource ownership (whose job is to release resources?), error handling (what exceptions are thrown?), and even cosmetic details such as conformance to standard naming conventions and terminology for the local environment.

To ensure that an interface makes the implementation as easy as possible, it needs to be minimal. The interface should provide all the operations that its clients might need (see Completeness above), but no more. The principle of having clear responsibilities (see above) can help to spot areas of an interface that are actually peripheral to the core responsibility of a component—and so should be hived off to a separate chunk of code.

For example, imagine a function that builds a collection of information describing a person (probably a function like Person::Person in C++ terms), including a date of birth. Sadly, there are likely to be many different ways of specifying this date. Should the function cope with all of them, in different variants, in order to be as helpful as possible for the clients of the function?

In this case, the answer is no. The core responsibility of the function is to build up data about people, not to do date conversion. A much better approach is to pick one date format for the interface, and separate out all of the date conversion code into a separate chunk of code. This separate chunk has one job to do—converting dates—and is very likely to be useful elsewhere in the overall system.
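A minimal sketch of that split, written in Python for brevity. The names `Person` and `parse_date`, and the particular list of accepted formats, are assumptions made for illustration only:

```python
from dataclasses import dataclass
from datetime import date, datetime

# Hypothetical set of input formats that the conversion code understands.
_KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text: str) -> date:
    """Date conversion lives in its own chunk of code, reusable elsewhere."""
    for fmt in _KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"unrecognized date: {text!r}")


@dataclass
class Person:
    """Builds up data about a person; accepts exactly one date representation."""
    name: str
    date_of_birth: date
```

A client holding a date in some other form converts it first, for example `Person("Ada", parse_date("10/12/1815"))`, so the person-building code never needs to change when a new date format turns up.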

3.1.2 Black Box Principle ¶

The client of a component shouldn’t have to care how that component is implemented. In fact, it shouldn’t even know how the component is implemented—that way, there’s no temptation to rely on internal implementation details.

This is the black box principle. Clients of a component should treat it as if it were a black box with a bunch of buttons and controls on the outside; the only way to get it to do anything is by frobbing these externally visible knobs. The component itself needs to make enough dials and gauges visible so that its clients can use it effectively, but no more than that.

Is it possible to come up with a completely different implementation that still satisfies the interface?

3.1.3 Physical Architecture ¶

Physical architecture is all the things needed to go from source code to a running system.

This is often an area that’s taken for granted; for a desktop application, the physical architecture is likely to involve just decisions about which directories to install code into, how to divide the code up into different shared libraries, and which operating systems to support.

For six-nines software, however, these physical factors are typically much more important to the design:

- They often combine hardware and software together as a coherent system, which can involve much lower-level considerations about what code runs where, and when.

- They often have scalability requirements that necessitate running on multiple machines in parallel—which immediately induces problems with keeping data synchronized properly.

- They are often large-scale software systems where the sheer weight of code causes problems for the toolchains. A build cycle that takes a week to run can seriously impact development productivity8.

- The reliability requirements often mean that the code has to transparently cope with software and hardware failures, and even with modifications of the running software while it’s running.

For these environments, each software component’s interface to the non-software parts of the system becomes important enough to require detailed design consideration—and the interfaces between software components may have hardware issues to consider too.

To achieve six-nines reliability, every component of the system has to reach that reliability level.

3.2 Designing for the Future ¶

Successful software has a long lifetime; if version 1.0 works well and sells, then there will be a version 2.0 and a version 8.1 and so on. As such, it makes sense to plan for success by ensuring that the software is designed with future enhancements in mind.

Design in the future tense: all manner of enhancements Should Just Work.

3.2.1 Component Responsibility ¶

Most importantly, what concept does the component correspond to?

When a new aspect of functionality is needed, the designer can look at the responsibilities of the existing components. If the new function fits under a particular component’s aegis, then that’s where the new code will be implemented. If no component seems relevant, then a new component may well be needed.

The Model-View-Controller architectural design pattern is a very well-known example of this (albeit from outside the world of six-nines development). Roughly:

- The Model is responsible for holding and manipulating data.

- The View is responsible for displaying data to the user.

- The Controller is responsible for converting user input to changes in the underlying data held by the Model, or to changes in how it is displayed by the View.

For any change to the functionality, this division of responsibilities usually makes it very clear where the change should go.

3.2.2 Minimizing Special Cases ¶

Lou Montulli: “I laughed heartily as I got questions from one of my former employees about FTP code that he was rewriting. It had taken 3 years of tuning to get code that could read the 60 different types of FTP servers, those 5000 lines of code may have looked ugly, but at least they worked.”

A special case in code is an interruption to the clear and logical progression of the code. A prototypical toy example of this might be a function that returns the number of days in a month: what does it return for February? All of a sudden, a straightforward array-lookup from month to length won’t work; the code needs to take the year as an input and include an if (month == February) arm that deals with leap years.

An obvious way to spot special cases is by the presence of the words “except” or “unless” in a description of some code: this function does X except when Y.

Special cases are an important part of software development.

However, special cases are distressing for the design purist. Each special case muddies the responsibilities of components, makes the interface less clear, makes the code less efficient and increases the chances of bugs creeping in between the edge cases.

So, accepting that special cases are unavoidable and important, how can software be designed to minimize their impact?

An important observation is that special cases tend to accumulate as software passes through multiple versions. Version 1.0 might have only had three special cases for interoperating with old web server software, but by version 3.4 there are dozens of similar hacks.

Generalize and encapsulate special cases.

For example, contrast if (month == February) with if (IsMonthOfVaryingLength(month)) (although this isn’t the best example to use, since there are unlikely to be any changes in month lengths any time soon).
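The month-length example can be sketched as follows, in Python for brevity. The function names are illustrative assumptions (the snake_case `is_month_of_varying_length` mirrors the `IsMonthOfVaryingLength` mentioned in the text); the point is only that the special case is named and confined to one place:

```python
import calendar

# Lengths for a non-leap year, indexed by month number (1 = January).
_MONTH_LENGTHS = [None, 31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]


def is_month_of_varying_length(month: int) -> bool:
    """The one place that knows which months are special."""
    return month == 2


def days_in_month(year: int, month: int) -> int:
    """Array lookup for the general case, with the special case encapsulated."""
    days = _MONTH_LENGTHS[month]
    if is_month_of_varying_length(month) and calendar.isleap(year):
        days += 1
    return days
```

Callers see only `days_in_month(year, month)`; if another month ever became special, only `is_month_of_varying_length` and the adjustment next to it would need to change.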

3.2.3 Scalability ¶

Successful software has a long lifetime (see Designing for the Future), as new functionality is added and higher version numbers get shipped out of the door. However, successful software is also popular software, and often the reason for later releases is to cope with the consequences of that popularity.

This is particularly relevant for server-side applications—code that runs on backend web servers, clusters or even mainframes. The first version may be a single-threaded single process running on such a server. As the software becomes more popular, this approach isn’t able to keep up with the demand.

So the next versions of the software may need to become multithreaded (but see Multithreading), or have multiple instances of the program running, to take advantage of a multiprocessor system. This requires synchronization, to ensure that shared data never gets corrupted. In one process or on one machine, this can use the synchronization mechanisms provided by the environment: semaphores and mutexes, file locks and shared memory.

Sharing data between machines is harder because there are fewer synchronization primitives available.

Let’s suppose the software is more successful still, and customers are now relying on the system 24 hours a day, 7 days a week—so we’re now firmly in the category of four-nines, five-nines or even six-nines software. What happens if a fuse blows in the middle of a transaction, and one of the machines comes down? The system needs to be fault tolerant, failing the transaction smoothly over to another machine.

The system also needs to cope with a dynamic software downgrade to roll the new version back to the drawing board.

A few of these evolutionary steps can apply to single-user desktop applications too. Once lots of versions of a product have been released, it’s all too easy to have odd interactions between incompatible versions of code installed at the same time (so-called “DLL Hell”). Similarly, power users can stretch the performance of an application and wonder why their dual-CPU desktop isn’t giving them any benefits9.

Having a good design in the first place can make the whole scary scalability evolution described above go much more smoothly than it would otherwise do, and is essential for six-nines systems where these scalability requirements are needed even in the 1.0 version of the product.

Because this kind of scaling-up is so important in six-nines systems, a whole section later in this chapter (see Scaling Up) is devoted to the kinds of techniques and design principles that help to achieve this. For the most part, these are simple slants to a design, which involve minimal adjustments in the early versions of a piece of software but which can reap huge rewards if and when the system begins to scale up massively.

As with software specifications (see Waterfall versus Agile), it’s worth contrasting this approach with the current recommendations in other software development sectors. The Extreme Programming world has a common precept that contradicts this section: You Aren’t Going to Need It (YAGNI). The recommendation here is not to spend much time ensuring that your code will cope with theoretical future enhancements: code as well as possible for today’s problems, and sort out tomorrow’s problems when they arrive—since they will probably be different from what you expected when they do arrive.

For six-nines systems, the difference between the two approaches is again driven by the firmness of the specification. Such projects are generally much more predictable overall, and this includes a much better chance of correctly predicting the ways that the software is likely to evolve. Moreover, for large and complex systems the kinds of change described in this section are extraordinarily difficult to retro-fit to software that has not been designed to allow for them—for example, physically dividing up software between different machines means that the interfaces have to change from being synchronous to asynchronous (see Asynchronicity).

So, (as ever) it’s a balance of factors: for high-resilience systems, the probability of correctly predicting the future enhancements is higher, and the cost of not planning for the enhancements is much higher, so it makes sense to plan ahead.

3.2.4 Diagnostics ¶

Previous sections have described design influences arising from planning for success; it’s also important to plan for failure.

It’s important to plan for failure: all software systems have bugs.

In addition to bugs in the software, there are any number of other factors that can stop software from working correctly out in the field. The user upgrades their underlying operating system to an incompatible version; the network cable gets pulled out; the hard disk fills up, and so on.

Dealing with these kinds of circumstances is much easier if some thought has been put into the issue as part of the design process. It’s straightforward to build in a logging and tracing facility as part of the original software development; retrofitting it after the fact is much more difficult. This diagnostic functionality can be useful even before the software hits the field—the testing phase (see Test) can also take advantage of it.

Diagnostic facilities that are worth designing in from the start include:

- Installation verification: checking that all the dependencies for the software are installed, and that all versions of the software itself match up correctly.

- Tracing and logging: providing a system whereby diagnostic information is produced, and can be tuned to generate varying amounts of information depending on what appears to be the problem area. This can be particularly useful for debugging crashed code, since core files/crash dumps are not always available.

- Manageability: providing a mechanism for administrators to reset the system, or to prune particularly problematic subsets of the software’s data, aids swift recovery from problems as they happen.

- Data verification: internal checking code that confirms whether chunks of data remain internally consistent and appear to be uncorrupted.

- Batch data processing: providing a mechanism to reload large amounts of data can ease recovery after a disastrous failure (you did remember to include a way to back up the data from a running system, didn’t you?).
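As one illustration of the tracing and logging item above, here is a minimal sketch using Python's standard `logging` module. The top-level logger name `myapp` and the area names are assumptions; the idea is simply that tracing stays quiet by default and can be turned up for whichever area appears to be the problem:

```python
import logging

ROOT = "myapp"  # hypothetical top-level logger name for the product


def configure_tracing(default_level: int = logging.WARNING) -> None:
    """Quiet by default, so tracing costs little in normal running."""
    logging.getLogger(ROOT).setLevel(default_level)


def set_area_level(area: str, level: int) -> None:
    """Turn tracing up (or down) for one suspect area, e.g. 'db' or 'net'."""
    logging.getLogger(f"{ROOT}.{area}").setLevel(level)


def area_logger(area: str) -> logging.Logger:
    """Each area logs through its own child logger of the product logger."""
    return logging.getLogger(f"{ROOT}.{area}")
```

After `configure_tracing()` and `set_area_level("db", logging.DEBUG)`, calls such as `area_logger("db").debug(...)` are emitted while debug output from other areas remains suppressed.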

3.2.5 Avoiding the Cutting Edge ¶

Programmers are always excited by new toys. Sadly, this extends to software design too: developers often insist on the very latest and shiniest tools, technologies and methodologies.

Using the latest and greatest technology greatly increases the chances that the weakest link will be in code that you have no control over.

In the longer term, working with the very latest in technology is also a wager. Many technologies are tried; few survive past their infancy, which causes problems if your software system relies on something that has had support withdrawn.

It’s not just the toolchain that may have problems supporting the latest technology: similar concerns apply to the developers themselves. Even if the original development team fully understand the new technology, the maintainers of version 1.0 and the developers of version 2.0 might not—and outside experts who can be hired in will be scarce (read: expensive).

Even if the technology in question is proven technology, if the team doing the implementation isn’t familiar with it, then some of the risks of cutting edge tools/techniques apply. In this situation, this isn’t so much a reason to avoid the tools, but instead to ensure that the project plan (see Planning a Project) allows sufficient time and training for the team to get to grips with the technology.

Allowing experimentation means that the next time around, the technology will no longer be untried: the cutting edge has been blunted.

3.3 Scaling Up ¶

An earlier section discussed the importance of planning ahead for scalability. This section describes the principles behind this kind of design for scalability—principles which are essential to achieve truly resilient software.

3.3.1 Good Design ¶

The most obvious aspect of designing for scalability is to ensure that the core design is sound. Nothing highlights potential weak points in a design better than trying to extend it to a fault-tolerant, distributed system.

The first part of this is clarity of interfaces—since it is likely that these interfaces will span distinct machines, distinct processes or distinct threads as the system scales up.

Encapsulating access to data allows changes to the data formats without changing any other components.

Likewise, if all of the data that describes a particular transaction in progress is held together as a coherent chunk, and all access to it is through known, narrow interfaces, then it is easier to monitor that state from a different machine—which can then take over smoothly in the event of failure.

3.3.2 Asynchronicity ¶

A common factor in most of the scalability issues discussed in this chapter is the use of asynchronous interfaces rather than synchronous ones. Asynchronous interfaces are harder to deal with, and they have their own particular pitfalls that the design of the system needs to cope with.

A synchronous interface is one where the results of the interface come back immediately (or apparently immediately). An interface made up of function calls or object method calls is almost always synchronous—the user of the interface calls the function with a number of parameters, and when the function call returns the operation is done.

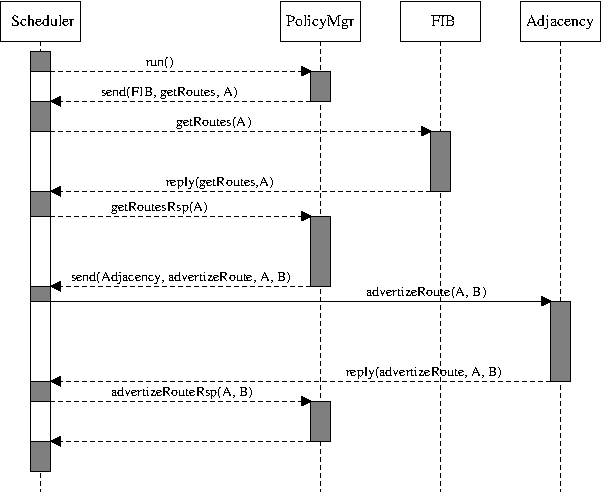

Figure 3.1: Synchronous interaction (as a UML sequence diagram)

An asynchronous interface involves a gap between the invocation and the results. The information that the operation on the interface needs is packaged up and delivered, and some time later the operation takes place and any results are delivered back to the code that uses the interface. This is usually done by encapsulating the interface with some kind of message-passing mechanism.

Compared with a synchronous interface, an asynchronous interface places extra demands on the code that uses it:

- There has to be some method for the delivery of the results. This could be the arrival of a message, or an invocation of a registered callback, but in either case the scheduling of this mechanism has to be set up.

- The results are delivered back to a different part of the code rather than just the next line, destroying locality of reference.

- The code that invokes the asynchronous operation needs to hold on to all of the state information associated with the operation while it’s waiting for the results.


- When the results of an operation arrive, they have to be correlated with the information that triggered that operation. What happens if the same operation occurs multiple times in parallel with different data?

- The code has to cope with a variety of timing windows, such as when the answers to different operations return in a different order, or when state needs to be cleaned up because the provider of the interface goes away while operations are pending.
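Several of the points above (holding state while waiting, correlating results, coping with replies in a different order, and cleaning up when the provider goes away) can be sketched together. This is an illustrative Python sketch only: the `AsyncClient` class, its method names and the `send` transport function are all assumptions rather than any particular messaging API:

```python
import itertools
from typing import Any, Callable, Dict, Tuple


class AsyncClient:
    """Holds per-operation state while results are outstanding."""

    def __init__(self, send: Callable[[int, Any], None]) -> None:
        self._send = send  # hypothetical message transport
        self._next_id = itertools.count(1)
        self._pending: Dict[int, Tuple[Any, Callable[[Any, Any], None]]] = {}

    def request(self, payload: Any, on_result: Callable[[Any, Any], None]) -> int:
        corr_id = next(self._next_id)
        # Keep the triggering data and the callback until the reply arrives.
        self._pending[corr_id] = (payload, on_result)
        self._send(corr_id, payload)
        return corr_id

    def handle_reply(self, corr_id: int, result: Any) -> None:
        entry = self._pending.pop(corr_id, None)
        if entry is None:
            return  # late or duplicate reply: nothing left to correlate with
        payload, on_result = entry
        on_result(payload, result)

    def provider_gone(self) -> None:
        """Clean up if the provider disappears while operations are pending."""
        pending, self._pending = self._pending, {}
        for payload, on_result in pending.values():
            on_result(payload, None)  # None stands for "no result available"
```

The correlation identifier is what allows the same operation to be in flight several times with different data: each reply is matched to its own saved payload, whatever order the replies arrive in.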

Asynchronous interfaces are ubiquitous in highly resilient systems.

Asynchronicity turns up elsewhere too. Graphical UI systems are usually event driven, and so some operations become asynchronous—for example, when a window needs updating the code calls an “invalidate screen area” method which triggers a “redraw screen area” message some time later. Some very low-level operations can also be asynchronous for performance reasons—for example, if the system can do I/O in parallel, it can be worth using asynchronous I/O operations (the calling code triggers a read from disk into an area of memory, but the read itself is done by a separate chunk of silicon, which notifies the main processor when the operation is complete, some time later). Similarly, if device driver code is running in an interrupt context, time-consuming processing has to be asynchronously deferred to other code so that other interrupts can be serviced quickly.

Designs involving message-passing asynchronicity usually include diagrams that show these message flows, together with variants that illustrate potential problems and timing windows (the slightly more formalized version of this is the UML sequence diagram, see Diagrams).

Figure 3.2: Asynchronous interaction

3.3.3 Fault Tolerance ¶

Fault tolerance: when software or hardware faults occur, there are backup systems available to take up the load.

To deal with the consequences of a fault, it must first be detected. For software faults, this might be as simple as continuously monitoring the current set of processes reported by the operating system; for hardware faults, the detection of faults might be either a feature of the hardware, or a result of a continuous “aliveness” polling. This is heavily dependent on the physical architecture (see Physical Architecture) of the software—knowing exactly where the code is supposed to run, both as processes and as processors.

Of course, the system that is used for detection of faults is itself susceptible to faults—what happens if the monitoring system goes down? To prevent an infinite regress, it’s usually enough to make sure the monitoring system is as simple as possible, and as heavily tested as possible.
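A sketch of the “aliveness” polling idea, kept deliberately simple in line with the advice above. The class name, the timeout value and the injectable clock are assumptions chosen so that the logic can be exercised without real delays:

```python
import time
from typing import Callable, Dict, List


class AlivenessMonitor:
    """Declares an instance failed if no heartbeat arrives within the timeout."""

    def __init__(self, timeout: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._timeout = timeout
        self._clock = clock  # injectable so tests can control time
        self._last_seen: Dict[str, float] = {}

    def heartbeat(self, name: str) -> None:
        """Record that the named instance has just reported in."""
        self._last_seen[name] = self._clock()

    def failed(self) -> List[str]:
        """Return the names of instances whose last heartbeat is too old."""
        now = self._clock()
        return sorted(name for name, seen in self._last_seen.items()
                      if now - seen > self._timeout)
```

Something still has to call `failed()` periodically and act on the answer; the less logic that caller contains, the easier it is to test heavily.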

Presenting the backup version of the code with the same inputs will probably produce the same output: another software fault.

When the fault is in the software, it’s entirely possible to reach a situation where a particular corrupted set of input data causes the code to bounce backwards and forwards between primary and backup instances ad infinitum10. If this is a possible or probable situation, then the fault tolerance system needs to detect this kind of situation and cope with it: ideally, by deducing what the problematic input is and removing it; more realistically, by raising an alarm for external support to look into.

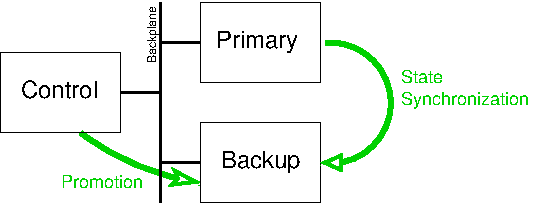

Once a fault has been detected, the fault tolerance system needs to deal with the fault, by activating a backup system so that it becomes the primary instance. This might involve starting a new copy of the code, or promoting an already-running backup copy of the code.

The fault tolerance system needs a mechanism for transferring state information.

The simplest approach for this transfer is to record the state in some external repository—perhaps a hard disk or a database. The newly-promoted code can then read back the state from this repository, and get to work. This simple approach also has the advantage that a single backup system can act as the backup for multiple primary systems—on the assumption that only a single primary is likely to fail at a time (this setup is known as 1:N or N+1 redundancy).

There are two issues with this simple approach. The first is that the problem of a single point of failure has just been pushed to a different place—what happens if the external data repository gets a hardware fault? In practice, this is less of an issue because hardware redundancy for data stores is easily obtained (RAID disk arrays, distributed databases and so on) and because the software for performing data access is simple enough to be very reliable.

The second issue is that the delay as a backup instance comes up to speed is likely to endanger the all-important downtime statistics for the system.

To cope with this issue, the fault tolerance system needs a more continuous and dynamic system for transferring state to the backup instance. In a system like this, the backup instance runs all the time, and state information is continuously synchronized across from the primary to the backup; promotion from backup to primary then happens with just a flick of a switch. This approach obviously involves doubling the number of running instances, with a higher load in both processing and occupancy, but this is part of the cost of achieving high resilience (this setup is known as 1:1 or 1+1 redundancy).
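The 1:1 arrangement can be sketched in a few lines of Python. This is a toy model under heavy assumptions: both instances live in one process, replication is a direct method call rather than a message, and the names `Instance` and `make_pair` are invented for illustration:

```python
import copy
from typing import Any, Dict, Optional, Tuple


class Instance:
    """One running copy of the code, acting as either primary or backup."""

    def __init__(self, name: str, role: str) -> None:
        self.name = name
        self.role = role
        self.state: Dict[str, Any] = {}
        self.peer: Optional["Instance"] = None

    def update(self, key: str, value: Any) -> None:
        if self.role != "primary":
            raise RuntimeError("only the primary accepts updates")
        self.state[key] = value
        if self.peer is not None:
            # Continuously synchronize each change across to the backup.
            self.peer.replicate(key, value)

    def replicate(self, key: str, value: Any) -> None:
        self.state[key] = copy.deepcopy(value)

    def promote(self) -> None:
        """The 'flick of a switch': the state is already here."""
        self.role = "primary"
        self.peer = None  # the old primary is assumed to be gone


def make_pair() -> Tuple[Instance, Instance]:
    primary, backup = Instance("A", "primary"), Instance("B", "backup")
    primary.peer = backup
    return primary, backup
```

In a real system the call to `replicate` would be an asynchronous message to another machine, which is where most of the difficulty lies.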

It’s possible for the backup instance to fail.

Figure 3.3: Fault tolerance

The ingredients of a fault tolerance system described so far are reasonably generic. The hardware detection systems and state synchronization mechanisms can all be re-used for different pieces of software. However, each particular piece of software needs to include code that is specific to that particular code’s purpose: the fault tolerance system can provide a transport for state synchronization, but the designers of each particular software product need to decide exactly what state needs to be synchronized, and when.

Deciding what state to replicate and when is an on-going tax on development.

3.3.4 Distribution ¶

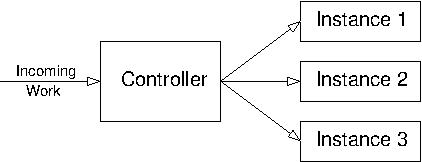

A distributed processing system divides up the processing for a software system across multiple physical locations, so that the same code is running on a number of different processors. This allows the performance of the system to scale up; if the code runs properly on ten machines in parallel, then it can easily be scaled up to run on twenty machines in parallel as the traffic levels rise.

The first step on the way to distributed processing is to run multiple worker threads in parallel in the same process. This approach is very common and although it isn’t technically a distributed system, many of the same design considerations apply.

Multiple threads allow a single process to take better advantage of a multiprocessor machine, and may improve responsiveness (if each individual chunk of work ties up a thread for a long period of time). Each thread is executing the same code, but there also needs to be some controller code that distributes the work among the various worker threads. If the individual worker threads rely on state information that is needed by other threads, then access to that data has to be correctly synchronized (see Multithreading).

A more flexible approach is a message-passing mechanism that synchronizes state information and triggers individual processes to do work.

From a message-passing, multiple process model it’s a very short step to a true distributed system. Instead of communicating between processes on the same machine, the message passing mechanism now has to communicate between different machines; instead of detecting when a worker process has terminated, the distribution mechanism now needs to detect when worker processes or processors have disappeared.

In all of these approaches, the work for the system has to be divided up among the various instances of the code. For stateless chunks of processing, this can be done in a straightforward round-robin fashion, or with an algorithm that tries to achieve more balanced processing. For processing that changes state information, it’s often important to make sure that later processing for a particular transaction is performed by the same instance of the code that dealt with earlier processing.

A unique way of identifying a transaction can be used to distribute the work in a reproducible way.
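One simple way to do this, sketched in Python: hash the transaction identifier and take the result modulo the number of workers. The function name and the choice of CRC-32 are assumptions for illustration; any hash that is stable across processes and machines would serve:

```python
import zlib


def pick_worker(transaction_id: str, worker_count: int) -> int:
    """Map a transaction to a worker index, identically on every machine."""
    # zlib.crc32 is deterministic, unlike Python's built-in hash() for
    # strings, which is randomized per process by default.
    digest = zlib.crc32(transaction_id.encode("utf-8"))
    return digest % worker_count
```

Every message for a given transaction then lands on the same instance. Note that with this simple scheme, changing `worker_count` remaps most transactions, so transactions in progress need care when instances are added or removed.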

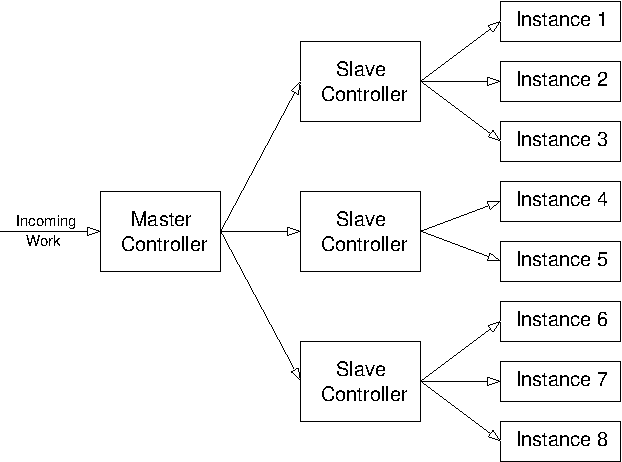

Figure 3.4: Distributed system

As for fault tolerance, note that there is a danger of pushing the original problem back one step. For fault tolerance, where the aim is to cope with single points of failure, the system that implements failover between potential points of failure may itself become a single point of failure. For distribution, where the aim is to avoid performance bottlenecks, the system that implements the distribution may itself become a bottleneck. In practice, however, the processing performed by the distribution controller is usually much less onerous than that performed by the individual instances. If this is not the case, the system may need to become hierarchical.

Figure 3.5: Hierarchical distributed system

3.3.5 Dynamic Software Upgrade ¶

Dynamic software upgrade: changing the version of the code while it’s running.

Obviously, performing dynamic software upgrade requires a mechanism for reliably installing and running the new version of the code on the system. This needs some way of identifying the different versions of the code that are available, and connecting to the right version. This also depends on the physical architecture (see Physical Architecture) of the code: how the code is split up into distinct executables, shared libraries and configuration files affects the granularity of the upgrade.

For example, several versions of a shared library can be installed side by side (libc.so.2.2, libc.so.2.3, libc.so.3.0, libc.so.3.1), with symbolic links which indicate the currently active versions (libc.so.2->libc.so.2.2, libc.so.3->libc.so.3.1, libc.so->libc.so.3) and which can be instantly swapped between versions. This mechanism needs to go both ways: sadly, it’s common to have to downgrade from a new version to go back to the drawing board.
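The link-swapping step can be sketched in Python, assuming a POSIX filesystem. The function name `activate_version` and the temporary-link naming are assumptions; the technique shown is to create the new link under a temporary name and rename it over the old one, so that there is no moment at which the link is missing:

```python
import os


def activate_version(link_path: str, target_name: str) -> None:
    """Point link_path at target_name, replacing any existing link."""
    tmp_link = link_path + ".tmp"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)  # clear debris from an interrupted earlier swap
    os.symlink(target_name, tmp_link)
    # Renaming over the old link replaces the link itself, not its target.
    os.replace(tmp_link, link_path)
```

The same call works in both directions: upgrading and downgrading differ only in the `target_name` passed in.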

However, the difficult part of dynamic software upgrade is not the substitution of the new code for the old code. What is much more difficult is ensuring that the active state for the running old system gets transferred across to the new version.

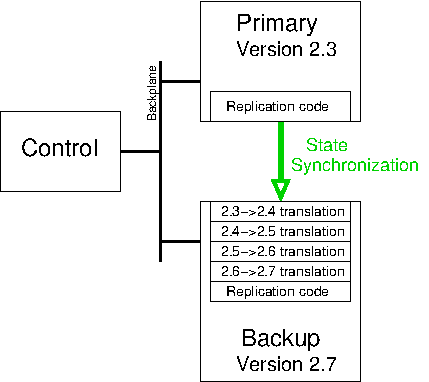

To a first approximation, this is the same problem as for fault tolerance (see Fault Tolerance), and a normal way to implement dynamic software upgrade is to leverage an existing fault tolerant system:

- With a primary instance and backup instance both running version 1.0, bring down the backup instance.

- Upgrade the backup instance to version 1.1.

- Bring the backup instance back online, and wait for state synchronization to complete.

- Explicitly force a failover from the version 1.0 primary instance to the version 1.1 backup instance.

- If the new version 1.1 primary instance is working correctly, upgrade the now-backup version 1.0 instance to also run version 1.1 of the code.

- Bring the second instance of version 1.1 online as the new backup.

The internal semantics of the state synchronization process have to be designed individually for each specific software product.

Figure 3.6: Dynamic software upgrade

In practice, this means that any new state information that is added to later versions of the code needs to have a default value and behaviour, to cope with state that’s been dynamically updated from an earlier version of the code. What’s more, the designers of the new feature need to make and implement decisions about what should happen if state that relies on the new feature gets downgraded. In worst-case scenarios, this migration of state may be so difficult that it’s not sensible to implement it—the consequences of the resulting downtime may be less than the costs of writing huge amounts of translation code.
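A minimal sketch of what those defaults and downgrade decisions might look like, in Python. Everything here is hypothetical: the `version` field, the `priority` field added in version 2, and the decision to simply drop new fields on downgrade are illustrative choices, not a recommended policy:

```python
CURRENT_VERSION = 2

# Hypothetical: version 2 of the code added a 'priority' field to the state.
_DEFAULTS_BY_VERSION = {2: {"priority": "normal"}}


def upgrade_state(state: dict) -> dict:
    """Fill in defaults for fields added after the state was written."""
    upgraded = dict(state)
    for version in range(state.get("version", 1) + 1, CURRENT_VERSION + 1):
        for field, default in _DEFAULTS_BY_VERSION.get(version, {}).items():
            upgraded.setdefault(field, default)
    upgraded["version"] = CURRENT_VERSION
    return upgraded


def downgrade_state(state: dict, target_version: int) -> dict:
    """Drop fields the older code does not understand (deliberately lossy)."""
    downgraded = dict(state)
    for version in range(target_version + 1, state.get("version", 1) + 1):
        for field in _DEFAULTS_BY_VERSION.get(version, {}):
            downgraded.pop(field, None)
    downgraded["version"] = target_version
    return downgraded
```

Dropping the field on downgrade is only one possible decision; the designers of a real feature might instead need to refuse the downgrade or translate the value into something the old code can honour.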

Dynamic software upgrade functionality imposes a serious development tax on all future changes to the product’s codebase.

3.4 Communicating the Design ¶

3.4.1 Why and Who ¶

It’s not enough to have a design. That design needs to be communicated to all of the people who are involved in the project, both present and future.

Communicating a design to someone else is a great smoke test for spotting holes in the design.

For a larger system, the design process may well occur in layers. The overall architecture gets refined into a high-level design, whose components are each in turn subject to a low-level design, and then each of the low-level designs gets turned into code. This is the implementation process: each abstract layer is implemented in terms of a lower-level, more concrete layer.

At each stage of this process, the people who are responsible for this implementation process need to understand the design. The chances are that there is more than a single implementer, and that the sum of all of the designs in all of the layers is more than will fit in a single implementer’s head.

Therefore the clearer the communication of each layer of the design, the greater chance there is that the next layer down will be implemented well. If one layer is well explained, documented and communicated, the layer below is much more likely to work well and consistently with the rest of the design.

The intended audience for the communication of the design is not just these different layers of implementers of the design. In the future, the code is likely to be supported, maintained and enhanced, and all of the folk who do these tasks can be well assisted by understanding the guiding principles behind the original design.

3.4.2 Diagrams ¶

For asynchronous systems, explicitly include the flow of time as an axis in a diagram.

This isn’t news; in fact, it’s so well known that there is an entire standardized system for depicting software systems and their constituents: the Unified Modeling Language (UML). Having a standard system can obviously save time during design discussions, but an arbitrary boxes-and-lines picture is usually clear enough for most purposes.

Colour can also help when communicating a design diagram, either to differentiate between different types of component or to indicate differing options for the design[11].

3.4.3 Documentation ¶

Documenting the design is important because it allows the intent behind the design to be communicated even to developers who aren’t around at the time of the original design. It also allows the higher-level concepts behind the design to be explained without getting bogged down in too many details.

In recent years, many software engineers have begun to include documentation hooks in their source code. A variety of tools (from Doxygen to JavaDoc all the way back to the granddaddy of them all, Donald Knuth’s WEB system) can then mechanically extract interface documentation and so on.
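For readers who have not met documentation hooks, here is a minimal sketch using Python docstrings as a stand-in; Doxygen and JavaDoc use their own comment syntax instead. The `checksum` function is a made-up example, and `inspect.getdoc` from the standard library plays the role of the extraction tool.

```python
import inspect


def checksum(data: bytes) -> int:
    """Return the sum of all bytes in data, modulo 256.

    This docstring is a documentation hook: a tool can pull it out of the
    source without anyone copying it into a separate document.
    """
    return sum(data) % 256


# Mechanically extract the interface documentation from the code itself.
extracted = inspect.getdoc(checksum)
summary_line = extracted.splitlines()[0]
```

What comes out is a description of one interface, which is useful but says nothing about how the pieces of the system fit together.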

Any documentation is better than nothing, but it’s important not to confuse this sort of thing with real documentation. Book publishers like O’Reilly sell many books that describe software systems for which the source code is freely available, even when that source code includes these kinds of documentation hooks. These books are organized and structured to explain the code without getting bogged down in details; they cover the concepts involved, they highlight key scenarios, they include pictures, and they’re still useful even when the software has moved on several versions since the book was published. In other words, they are roughly equivalent to a good design document.

As well as describing how the code is supposed to work, the design document should also describe why it has been designed that way.

- Structure: The text should be well-structured, in order to make it easy to read and review—software developers tend to think about things in a structured way.

- Rationale: As well as describing how the code is supposed to work, the design document should also describe why it has been designed that way. In fact, it’s often useful to discuss other potential approaches and explain why they have not been taken—people often learn from and remember (potential) horror stories. This helps future developers understand what kinds of changes to the design are likely to work, and which are likely to cause unintended consequences.

- Satisfaction of the requirements: It should be clear from the documentation of the design that the requirements for the software are actually satisfied by the design. In particular, it’s often worth including a section in the design that revisits any requirements use cases (see Use Cases), showing how the internals of the code would work in those scenarios; many people understand things better as a result of induction (seeing several examples and spotting the patterns involved) than deduction (explaining the code’s behaviour and its direct consequences).

The passive voice avoids the question of exactly who or what does things.

- Avoidance of the passive voice: The design document describes how things get done. The previous sentence uses the passive voice: “things get done” avoids the question of exactly who or what does things. For a design document to be unambiguous, it should describe exactly which chunk of code performs all of the functionality described.

4 Code ¶

This chapter deals with the code itself. This is a shorter chapter than some, because I’ve tried to avoid repeating too much of the standard advice about how to write good code on a line-by-line basis; in fact, many of the topics of this chapter are really aspects of low-level design rather than pure coding[12]. However, there are still a few things that are rarely explored and which can be particularly relevant when the aim is to achieve the highest levels of quality.

4.1 Portability ¶

Portability is planning for success; it assumes that the lifetime of the software is going to be longer than the popularity of the current platforms.

There are different levels of portability, depending on how much functionality is assumed to be provided by the environment. This might be as little as a C compiler and a standard C library—or perhaps not even that, given the limitations of some embedded systems (for example, no floating point support). It might be a set of functionality that corresponds to some defined or de facto standard, such as the various incarnations of UNIX. For the software to actually do something, there has to be some kind of assumption about the functionality provided by the environment—perhaps the availability of a reliable database system, or a standard sockets stack.

Encapsulate the interactions between the product code and the environment.

For six-nines software, the interactions between the product code and the environment are often very low-level. Many of these interfaces are standardized—the C library, a sockets library, the POSIX thread library—but they can also be very environment specific; for example, notification mechanisms for hardware failures or back-plane communication mechanisms. Some of these low-level interfaces can be very unusual for programmers who are used to higher level languages. For example, some network processors have two different types of memory: general-purpose memory for control information, and packet buffers for transporting network data. The packet buffers are optimized for moving a whole set of data through the system quickly, but at the expense of making access to individual bytes in a packet slower and more awkward. Taken together, this means that software that may need to be ported to these systems has to carefully distinguish between the two different types of memory.

All of these interfaces between the software and its environment need to be encapsulated, and in generating these encapsulations it’s worth examining more systems than just the target range of portability. For example, when generating an encapsulated interface to a sockets stack for a UNIX-based system, it’s worth checking how the Windows implementation of sockets would fit under the interface. Likewise, when encapsulating the interface to a database system, it’s worth considering a number of potential underlying databases—Oracle, MySQL, DB2, PostgreSQL, etc.—to see whether a small amount of extra effort in the interface would encapsulate a much wider range of underlying implementations (see Black Box Principle). As ever, it’s a balance between an interface that’s a least common denominator that pushes lots of processing up to the product code, and an interface that relies so closely on one vendor’s special features that the product code is effectively locked into that vendor forever.
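A small sketch of this kind of encapsulation, in Python, might look like the following. The `KeyValueStore` interface, its two implementations and the `record_login` function are all hypothetical names chosen for the example; SQLite (via the standard `sqlite3` module) stands in for whichever database a real product would use.

```python
import sqlite3
from abc import ABC, abstractmethod
from typing import Optional


class KeyValueStore(ABC):
    """Hypothetical interface: the only view of storage that product code sees."""

    @abstractmethod
    def put(self, key: str, value: str) -> None: ...

    @abstractmethod
    def get(self, key: str) -> Optional[str]: ...


class MemoryStore(KeyValueStore):
    """Trivial implementation, handy for tests and for checking the interface."""

    def __init__(self) -> None:
        self._items = {}

    def put(self, key: str, value: str) -> None:
        self._items[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._items.get(key)


class SqliteStore(KeyValueStore):
    """The same interface on top of a real database engine."""

    def __init__(self, path: str = ":memory:") -> None:
        self._db = sqlite3.connect(path)
        self._db.execute("CREATE TABLE IF NOT EXISTS kv (k TEXT PRIMARY KEY, v TEXT)")

    def put(self, key: str, value: str) -> None:
        self._db.execute("INSERT OR REPLACE INTO kv (k, v) VALUES (?, ?)", (key, value))
        self._db.commit()

    def get(self, key: str) -> Optional[str]:
        row = self._db.execute("SELECT v FROM kv WHERE k = ?", (key,)).fetchone()
        return row[0] if row else None


def record_login(store: KeyValueStore, user: str) -> Optional[str]:
    """Product code: written against the interface, not against one vendor."""
    store.put("last_login", user)
    return store.get("last_login")
```

Writing the second implementation is a cheap way to find out whether the interface has accidentally absorbed assumptions from the first.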

4.2 Internationalization ¶

Internationalization is the process of ensuring that software can run successfully in a variety of different locales. Locales involve more than just the language used in the user interface; different cultures have different conventions for displaying various kinds of data. For example, 5/6/07 would be interpreted as the 5th June 2007 in the UK, but as 6th May 2007 in the US. Ten billion[14] would be written 10,000,000,000 in the US, but as 10,00,00,00,000 in India.
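The two examples above can be reproduced in a few lines of Python. This is only a sketch: the function names are invented for illustration, and a real product would normally take these conventions from locale data rather than hard-coding them.

```python
from datetime import datetime


def parse_uk(text: str):
    """Interpret the text as day/month/year, the UK convention."""
    return datetime.strptime(text, "%d/%m/%y").date()


def parse_us(text: str):
    """Interpret the text as month/day/year, the US convention."""
    return datetime.strptime(text, "%m/%d/%y").date()


def group_western(n: int) -> str:
    """Group digits in threes, as in 10,000,000,000."""
    return f"{n:,}"


def group_indian(n: int) -> str:
    """Group the last three digits, then pairs (non-negative n only)."""
    digits = str(n)
    if len(digits) <= 3:
        return digits
    head, tail = digits[:-3], digits[-3:]
    pairs = []
    while len(head) > 2:
        pairs.insert(0, head[-2:])
        head = head[:-2]
    pairs.insert(0, head)  # the leading group has one or two digits
    return ",".join(pairs + [tail])
```

The same input string yields two different dates, and the same number yields two different display strings, so neither can be handled correctly without knowing the locale.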

Once software has been internationalized, it can then be localized[15] by supplying all of the locale-specific settings for a particular target: translated user interface texts, date and time settings, currency settings and so on.

Internationalization forces the designer to think carefully about the concepts behind some common types of data.

What exactly is a string? Is it conceptually a sequence of bytes or a sequence of characters? If you have to iterate through that sequence, this distinction makes a huge difference. To convert from a concrete sequence of bytes to a logical sequence of characters (or vice versa), the code needs to know the encoding: UTF-8 or ISO-Latin-1 or UCS-2 etc. To convert from a logical sequence of characters to human-readable text on screen, the code needs to have a glyph for every character, which depends on the fonts available for display.
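A short Python sketch makes the byte/character distinction concrete. The example word is arbitrary; the point is that the same five characters occupy a different number of bytes in different encodings, and that decoding with the wrong encoding silently produces different characters.

```python
text = "na\u00efve"  # five characters; the third is U+00EF (i with diaeresis)

as_utf8 = text.encode("utf-8")      # the non-ASCII character takes two bytes
as_latin1 = text.encode("latin-1")  # every character takes exactly one byte

char_count = len(text)
utf8_byte_count = len(as_utf8)
latin1_byte_count = len(as_latin1)

# Decoding UTF-8 bytes as if they were ISO-Latin-1 does not fail;
# it just yields the wrong sequence of characters.
misread = as_utf8.decode("latin-1")
```

Code that iterates over `as_utf8` visits six items, while code that iterates over `text` visits five, which is exactly the distinction the design has to pin down.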

What exactly is a date and time? Is it in some local timezone, or in a fixed well-known timezone? If it’s in a local timezone, does the code incorrectly assume that there are 24 hours in a day or that time always increases (neither assumption is true during daylight-saving time shifts)? If it’s stored in a fixed timezone like UTC[16], does the code need to convert it to and from a more friendly local time for the end user? If so, where does the information needed for the conversion come from and what happens when it changes[17]?
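Both faulty assumptions can be shown with the UK clock changes of 2007, which fell on 25 March and 28 October. In this Python sketch the two UTC offsets are hard-coded purely to keep the example self-contained; that is an assumption of the sketch, and real code would obtain them from a time zone database (for instance through Python's `zoneinfo` module).

```python
from datetime import datetime, timedelta, timezone

# Hard-coded offsets, for illustration only.
GMT = timezone(timedelta(hours=0), "GMT")
BST = timezone(timedelta(hours=1), "BST")

# Local midnight to local midnight across the spring change: not 24 hours.
start_of_day = datetime(2007, 3, 25, 0, 0, tzinfo=GMT)
end_of_day = datetime(2007, 3, 26, 0, 0, tzinfo=BST)
day_length = end_of_day - start_of_day

# Across the autumn change, a later instant shows an earlier wall-clock time.
earlier_instant = datetime(2007, 10, 28, 1, 30, tzinfo=BST)
later_instant = datetime(2007, 10, 28, 1, 0, tzinfo=GMT)
wall_clock_went_backwards = (
    later_instant.replace(tzinfo=None) < earlier_instant.replace(tzinfo=None)
)
```

Storing instants in UTC and converting only for display avoids both traps, at the price of needing up-to-date conversion data.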

What’s in a name? Dividing a person’s name into “first name” and “last name” isn’t appropriate for many Asian countries, where typical usage has a family name first, followed by a given name. Even with the more useful division into “given name” and “family name”, there are still potential wrinkles: many cultures have gender-specific variants of names, so a brother and sister would not have matching family names. Icelandic directories are organized by given name rather than “family name”, because Iceland uses a patronymic system (where the “family name” is the father’s name followed by “-son” or “-dottir”)—brothers share a family name, but father and son do not.

Being aware of the range of different cultural norms for various kinds of data makes it much more likely that the code will accurately and robustly model the core concepts involved.

4.3 Multithreading ¶

This section concentrates on one specific area of low-level design and coding that can have significant effects on quality: multithreading.

In multithreaded code, multiple threads of execution share access to the same set of memory areas, and they have to ensure that access to that memory is correctly synchronized to prevent corruption of data. Exactly the same considerations apply when multiple processes use explicitly shared memory, although in practice there are fewer problems because access to the shared area is more explicit and deliberate.

Multithreaded code is much harder to write correctly than single-threaded code.

Of course, it is possible to design and code around these difficulties successfully. However, the only way to continue being successful as the size of the software scales up is by imposing some serious discipline on coding practices—rigorously checked conventions on mutex protection for data areas, wrapped locking calls to allow checks for locking hierarchy inversions, well-defined conventions for thread entrypoints and so on.

Even with this level of discipline, multithreading still imposes some serious costs to maintainability. The key aspect of this is unpredictability—multithreaded code devolves the responsibility for scheduling different parts of the code to the operating system, and there is no reliable way of determining in what order different parts of the code will run. This makes it very difficult to build reliable regression tests that exercise the code fully; it also means that when bugs do occur, they are much harder to debug (see Debugging).

So when do the benefits of multithreading outweigh the costs? When is it worth using multithreading?

- When there is little or no interaction between different threads. The most common pattern for this is when there are several worker threads that execute the same code path, but that code path includes blocking operations. In this case, the multithreading is essential for the code to perform responsively enough, but the design is effectively single threaded: if the code only ran one thread, it would behave exactly the same (only slower).

- When there are processing-intensive operations that need to be performed. In this case, the most straightforward design may be for the heavy processing to be done in a synchronous way by a dedicated thread, while other threads continue with the other functions of the software and wait for the processing to complete (thus ensuring UI responsiveness, for example).

- When you have to. Sometimes an essential underlying API requires multithreading; this is most common in UI frameworks.
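The first case in the list above, worker threads that all run the same blocking code path, can be sketched in Python as follows. The `fetch` function is a made-up stand-in for any blocking operation (a short sleep replaces real I/O), and the worker count is arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(item: int) -> int:
    """Stand-in for a blocking operation such as a network request."""
    time.sleep(0.01)
    return item * item


def run_sequential(items):
    """One thread: correct, but each blocking call waits for the previous one."""
    return [fetch(item) for item in items]


def run_threaded(items, workers: int = 4):
    """Several workers running the same code path, with no shared state."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, items))  # map preserves the input order
```

Because the workers do not interact, the threaded version returns exactly what the sequential version returns, only sooner, so it remains straightforward to test.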

4.4 Coding Standards ¶

Everyone has their own favourite coding convention, and they usually provide explanations of what the benefits of their particular preferences are. These preferences vary widely depending on the programming language and development environment; however, it’s worth stepping back to explore what the benefits are for the whole concept of coding standards.

The first benefit of a common set of conventions across a codebase is that it helps with maintainability. When a developer explores a new area of code, it’s in a format that they’re familiar with, and so it’s ever so slightly easier to read and understand. Standard naming conventions for different types of variables—local, global, object members—can make the intent of a piece of code clearer to the reader.

The next benefit is that it can sometimes help to avoid common errors, by encoding wisdom learnt in previous developments. For example, in C-like languages it’s often recommended that comparison operations should be written as if (12 == var) rather than if (var == 12), to avoid the possibility of mistyping and getting if (var = 12) instead. Another example is that coding standards for portable software often ban the use of C/C++ unions, since their layout in memory is very implementation-dependent. For portable software (see Portability) that has to deal with byte flipping issues, a variable naming convention that indicates the byte ordering can save many bugs[18].
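As a small illustration of a byte-order naming convention, the Python sketch below uses the suffixes `_host` and `_net`. The suffixes and function names are assumptions made for this example, not a convention taken from any particular coding standard; the `struct` format `"!H"` means an unsigned 16-bit value in network (big-endian) byte order.

```python
import struct


def encode_length(length_host: int) -> bytes:
    """Convert a host-order integer into two bytes in network order."""
    length_net = struct.pack("!H", length_host)
    return length_net


def decode_length(length_net: bytes) -> int:
    """Convert two network-order bytes back into a host-order integer."""
    (length_host,) = struct.unpack("!H", length_net)
    return length_host
```

With the suffixes in place, a line that does arithmetic on something called `length_net`, or writes a `length_host` value straight to the wire, looks wrong to a reviewer at a glance.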